Our Approach to Data Discovery

Discover the machine learning algorithms, data validation processes, and methodologies we use to identify and track federal dataset usage in research publications.

Three-Stage Research Process

Our approach has three workflow stages: identifying datasets in publications with machine learning; giving agencies and researchers access to the results through APIs, Jupyter Notebooks, and researcher dashboards; and gathering feedback from the user community to continuously improve our methods.

How We Do It

This project combines machine learning algorithms with expert human validation. Our team of expert reviewers validates the model outputs so that the results are accurate, reliable, and actionable for policy and research decisions.

🔍 Process

Dataset Identification

Advanced machine learning algorithms scan millions of research publications to identify mentions and usage of federal datasets.

📊 Access

Data Dissemination

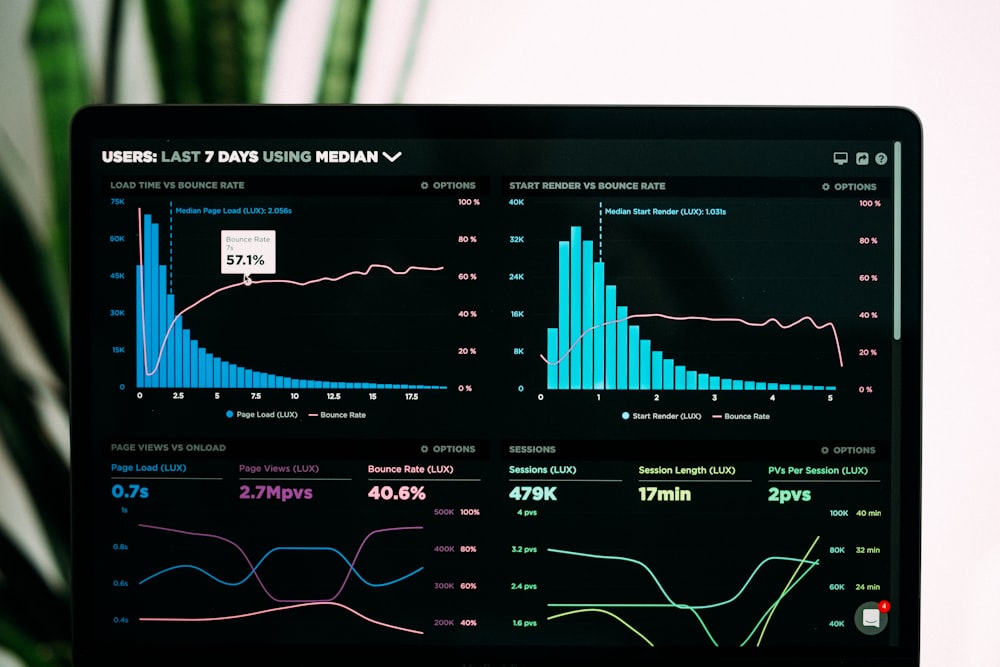

Accessible tools for researchers and agencies, including APIs, dashboards, and interactive notebooks.

💬 Feedback

Community Input

Continuous improvement through user feedback and expert validation to enhance accuracy and utility.

Machine Learning Algorithms

Three specialized models were developed using Machine Learning (ML) and Natural Language Processing (NLP) tools to identify datasets within full-text publications. Each model contributes distinct strengths to the overall detection system.

🧠 Model 1: Deep Learning - Sentence Context

A deep learning-based approach that learns which kinds of sentences contain references to datasets. Of the three models, this one is the most robust to new datasets, and it evaluates all text within a document to understand contextual usage patterns.

- Strength: Comprehensive text analysis

- Approach: Contextual sentence classification

- Coverage: Full document evaluation

🏷️ Model 2: Deep Learning - Entity Names

A deep learning-based approach that extracts names of entities from text and classifies whether an entity represents a dataset. This model excels at identifying specific dataset names and acronyms.

- Strength: Precise entity recognition

- Approach: Named entity extraction and classification

- Coverage: Dataset name identification

🔍 Model 3: Pattern Matching

A rule-based approach that searches documents for text patterns similar to the entries in a curated list of existing datasets. This model provides high precision for known dataset names and their variations.

- Strength: High precision matching

- Approach: Rule-based pattern recognition

- Coverage: Known dataset variations
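
To make the rule-based idea concrete, here is a minimal sketch of matching text against a curated list. It is not the project's actual implementation: the `CURATED_DATASETS` entries, the alias lists, and the function names `build_pattern` and `find_mentions` are all hypothetical, and simple case-insensitive phrase matching stands in for whatever rules the real model applies.

```python
import re

# Hypothetical curated list: canonical dataset name -> known variations.
# These entries are illustrative only, not the project's actual list.
CURATED_DATASETS = {
    "National Health Interview Survey": ["NHIS"],
    "Census of Agriculture": ["Ag Census", "Agricultural Census"],
}


def build_pattern(names):
    """Compile one case-insensitive regex that matches any name as a whole phrase."""
    # Longest names first, so longer variations win over shorter ones.
    ordered = sorted(names, key=len, reverse=True)
    # Allow any run of whitespace between the words of a name.
    parts = [r"\s+".join(re.escape(word) for word in name.split()) for name in ordered]
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def find_mentions(text, curated=CURATED_DATASETS):
    """Return (canonical_name, matched_text, start_offset) tuples, in text order."""
    mentions = []
    for canonical, aliases in curated.items():
        pattern = build_pattern([canonical, *aliases])
        for match in pattern.finditer(text):
            mentions.append((canonical, match.group(0), match.start()))
    return sorted(mentions, key=lambda mention: mention[2])
```

Calling `find_mentions` on a sentence returns every curated name or variation it contains, each mapped back to its canonical dataset. Matching only against a known list is what makes this kind of approach precise, and also why it cannot find datasets that are missing from the list.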

Model Integration

These three models work together as an ensemble, combining their individual strengths to achieve higher accuracy and coverage than any single model could. The integration allows us to capture both explicit dataset mentions and implicit usage patterns across diverse research domains.
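
The text does not say how the model outputs are combined, so the following is only one plausible sketch: a union of candidates with a count of supporting models. The function name `combine_candidates`, the `min_votes` threshold, and the model labels used as dictionary keys are assumptions made for illustration.

```python
from collections import defaultdict


def combine_candidates(model_outputs, min_votes=1):
    """Merge per-model candidates into one list, recording which models agree.

    model_outputs maps a model label to the set of (publication_id, dataset)
    pairs that model flagged. min_votes is a hypothetical threshold: 1 keeps
    the union of all candidates, higher values require agreement.
    """
    support = defaultdict(set)
    for model_name, candidates in model_outputs.items():
        for candidate in candidates:
            support[candidate].add(model_name)

    merged = [
        {"publication": publication, "dataset": dataset, "models": sorted(models)}
        for (publication, dataset), models in support.items()
        if len(models) >= min_votes
    ]
    # Candidates backed by more models come first; ties are ordered by name.
    return sorted(
        merged,
        key=lambda c: (-len(c["models"]), c["publication"], c["dataset"]),
    )
```

With `min_votes=1` the result favors coverage, since a candidate found by any one model is kept. Raising the threshold trades coverage for agreement between models.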

Data Validation Process

Each agency uses our validation tool to check that machine learning outputs are accurate and reliable. This human-in-the-loop approach combines automated detection with expert review to maintain high quality standards.

Validation Workflow

The validation tool shows reviewers snippets from actual publications in which our models have identified potential dataset references. Each snippet contains a candidate phrase identified by the model, and expert validators determine whether each snippet correctly refers to the target dataset.

📋 Review Process

- Expert reviewers examine model outputs

- Context snippets provide publication evidence

- Binary validation: correct or incorrect

- Feedback improves model performance
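
As a rough illustration of the review process above, a snippet can be modeled as a record that carries the candidate phrase, its surrounding context, and a binary decision. The `Snippet` fields, the 60-character context window, and the helper names are assumptions for this sketch and do not describe the actual validation tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Snippet:
    publication_id: str
    target_dataset: str
    candidate_phrase: str
    context: str
    is_correct: Optional[bool] = None  # None until a reviewer decides


def make_snippet(publication_id, target_dataset, text, start, end, window=60):
    """Cut a context window around the candidate phrase at text[start:end]."""
    left = max(0, start - window)
    right = min(len(text), end + window)
    return Snippet(publication_id, target_dataset, text[start:end], text[left:right])


def record_review(snippet, is_correct):
    """Store a reviewer's binary decision: correct (True) or incorrect (False)."""
    snippet.is_correct = bool(is_correct)
    return snippet


def reviewed_fraction_correct(snippets):
    """Share of reviewed snippets marked correct, or None if none are reviewed."""
    reviewed = [s for s in snippets if s.is_correct is not None]
    if not reviewed:
        return None
    return sum(s.is_correct for s in reviewed) / len(reviewed)
```

Keeping unreviewed snippets as `None` rather than `False` separates "not yet reviewed" from "reviewed and rejected", which matters when the decisions are later used as feedback.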

🎯 Quality Metrics

- Precision and recall tracking

- Inter-reviewer agreement scores

- Model confidence calibration

- Continuous accuracy monitoring
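
Two of the metrics listed above can be sketched with standard formulas. Using Cohen's kappa as the inter-reviewer agreement score is an assumption, since the text does not name a specific statistic, and the function names are illustrative.

```python
def precision_recall(predicted, actual):
    """Precision and recall for two sets of (publication, dataset) pairs."""
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers' binary labels on the same snippets."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of snippets where both reviewers chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each reviewer's rate of "correct" decisions.
    rate_a = sum(labels_a) / n
    rate_b = sum(labels_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    if expected == 1.0:
        # Kappa is undefined when both reviewers used one identical label
        # throughout; returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)
```

Here `predicted` would be the pairs flagged by a model and `actual` the pairs confirmed by reviewers. Kappa corrects raw agreement for the agreement two reviewers would reach by chance, so it reads lower than a simple match rate.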

Validation Outcomes

The validation process delivers three key benefits:

- Model Performance Insights: Provides detailed understanding of how well our models identify datasets across different research domains and publication types.

- Related Dataset Discovery: Identifies other datasets used in conjunction with agency datasets, revealing research ecosystems and data integration patterns.

- Usage Pattern Analysis: Reveals how researchers utilize each agency's data, informing future data collection and dissemination strategies.

Methodology Impact

Our approach to dataset identification and validation has enabled federal agencies to better understand the research impact of their data investments. By combining machine learning with expert human validation, we provide reliable insights into how government data supports scientific discovery and evidence-based policymaking.

This methodology has been successfully applied across multiple agencies and research domains, demonstrating its versatility and effectiveness in tracking data usage patterns at scale. The continuous feedback loop between automated detection and expert validation ensures that our methods remain accurate and relevant as research practices evolve.